SQUID Digest

Can news sentiment predict crypto price movements?

We’re finding out in real time, transparently, with full backtest results published daily right to your inbox.

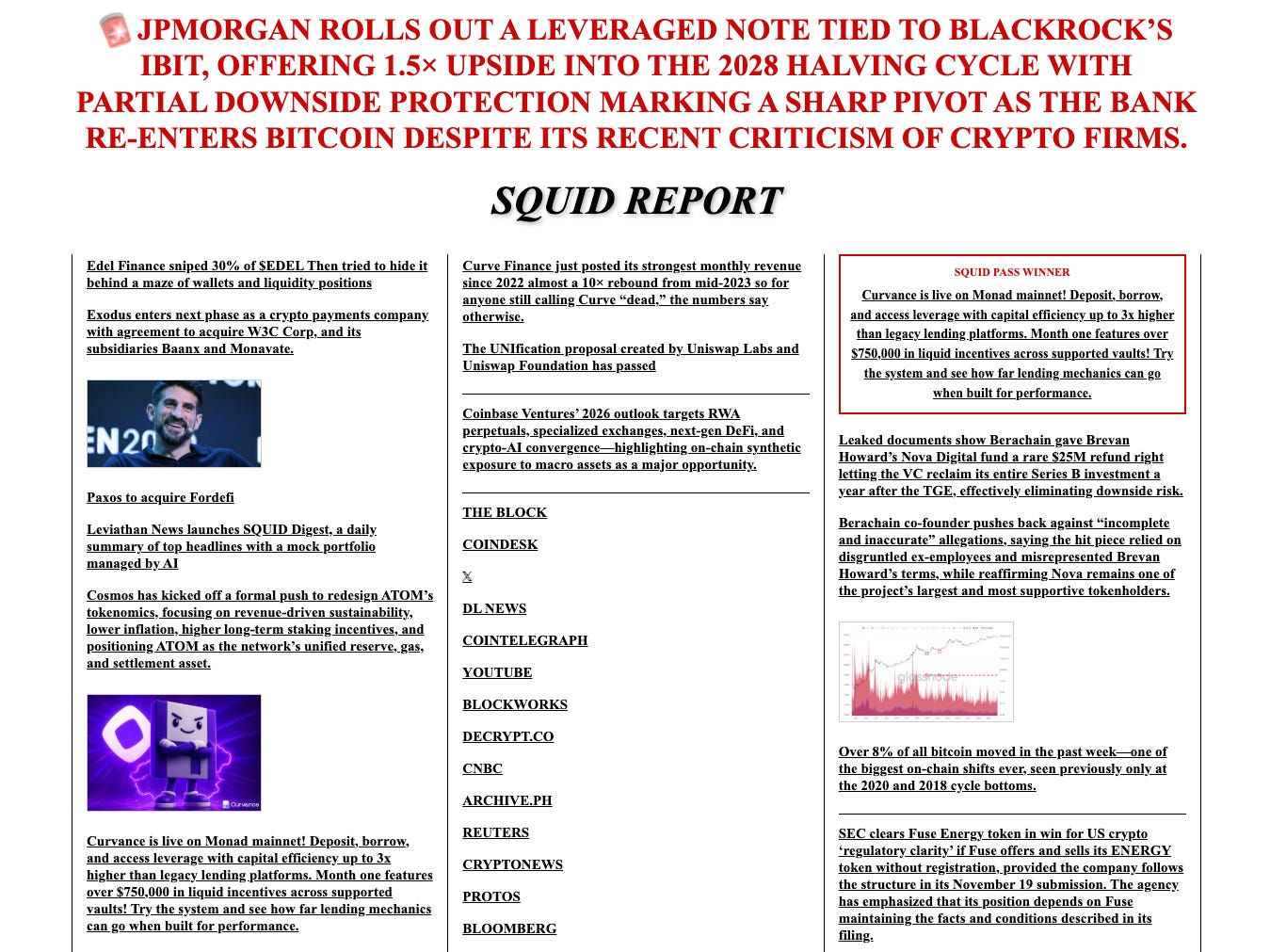

Here’s something for you to digest this holiday weekend… Leviathan is thrilled to announce the launch of SQUID Digest. This new daily newsletter, hosted via Ghost, represents an ambitious project months in the making.

SQUID Digest resolves several longstanding problems within one elegant solution:

News You Can Use: Many crypto followers suffer burnout from the real-time nature of crypto. We built SQUID Digest to summarize only the most important news from the past 24 hours... but how do you reliably separate signal from noise?

AI Without the Slop: We’ve long wanted to incorporate AI into our processes, but how could we actually use AI to create an engaging newsletter, given that AI writing remains nearly unreadable?

Trading Signals: Many of us follow crypto news to inform our trading habits, but how can we help this audience when we’re bad traders ourselves and won’t ever offer financial advice?

We’re proud to give you a quick tour of our solution.

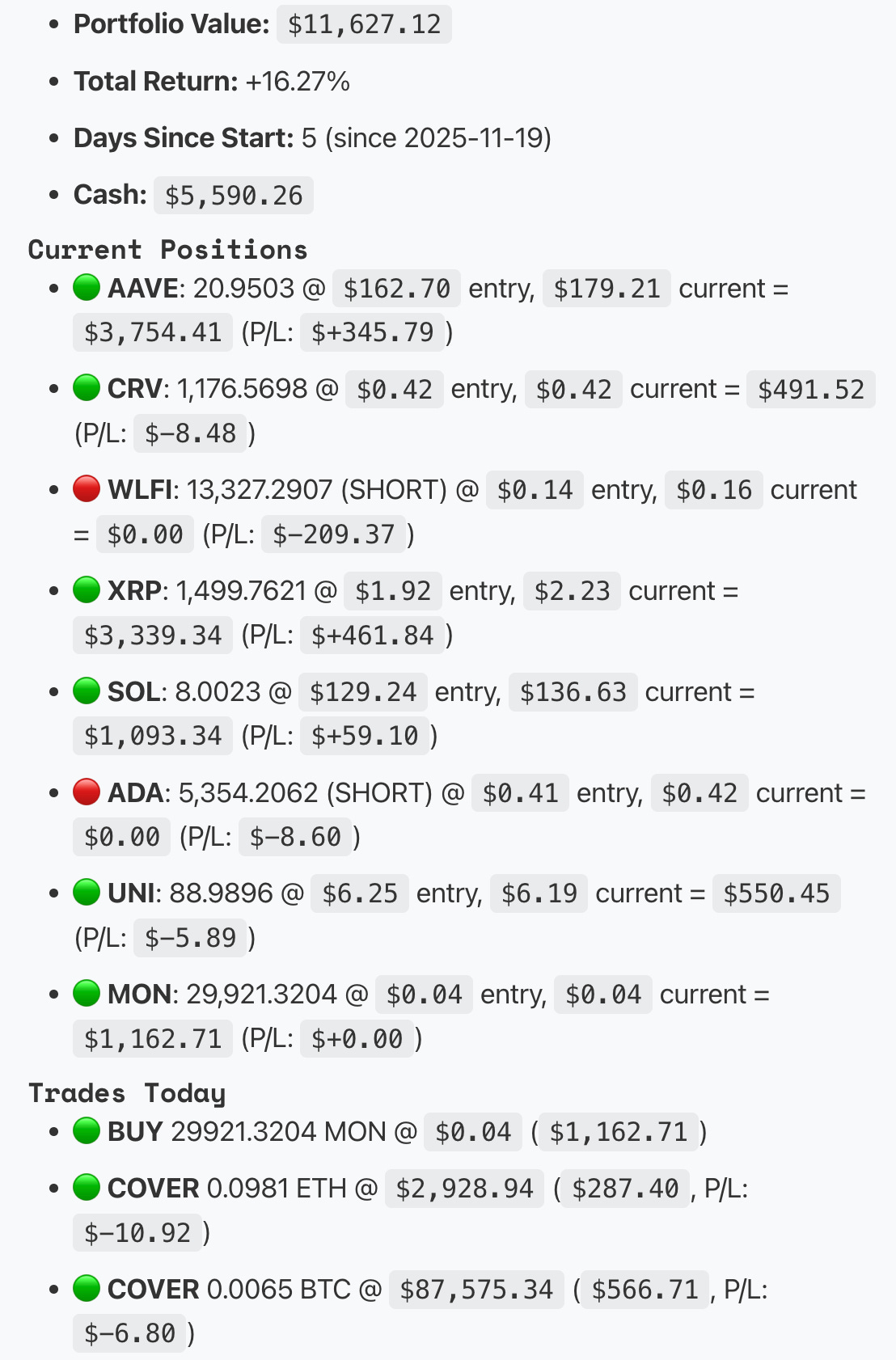

Each day, SQUID Digest automatically scrapes the previous 24 hours of headlines and feeds them into Perplexity. Our AI researches the headlines, generates trading signals, and uses them to manage a fictitious $10,000 portfolio. Each day, we publish the full results so everybody can track its performance in real time.
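The daily loop can be pictured as a paper-trading ledger that the AI’s signals act on. This is a minimal sketch, not SQUID Digest’s actual code: the `BUY`/`SELL`/`HOLD` signal vocabulary, the `PaperPortfolio` class, and the rule that each BUY spends 10% of the remaining cash are all hypothetical, and the Perplexity research step is assumed to have already produced the `signals` dict.

```python
from dataclasses import dataclass, field

# Hypothetical signal vocabulary; the real prompt/output format is not documented in this post.
BUY, SELL, HOLD = "BUY", "SELL", "HOLD"


@dataclass
class PaperPortfolio:
    """A fictitious portfolio that starts with $10,000 in cash."""
    cash: float = 10_000.0
    holdings: dict[str, float] = field(default_factory=dict)  # asset -> quantity held

    def value(self, prices: dict[str, float]) -> float:
        # Mark every open position to market and add uninvested cash.
        return self.cash + sum(qty * prices[a] for a, qty in self.holdings.items())

    def apply_signals(self, signals: dict[str, str], prices: dict[str, float],
                      buy_fraction: float = 0.1) -> None:
        # Sell first so cash freed by SELL signals can fund the same day's buys.
        for asset, signal in signals.items():
            if signal == SELL and asset in self.holdings:
                self.cash += self.holdings.pop(asset) * prices[asset]
        # Each BUY spends a fixed fraction of the cash remaining at that point.
        for asset, signal in signals.items():
            if signal == BUY:
                spend = self.cash * buy_fraction
                self.cash -= spend
                self.holdings[asset] = self.holdings.get(asset, 0.0) + spend / prices[asset]
```

For example, a BUY on BTC at $100,000 moves $1,000 of the starting cash into 0.01 BTC; HOLD signals leave the ledger untouched.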

If you’re interested in following this experiment, the launch of SQUID Digest offers many new ways to follow Leviathan’s news feed and trading signals:

Visit https://digest.leviathannews.xyz/ to browse the newsletter archives and subscribe (this newsletter is managed by Ghost and therefore requires a subscription separate from our Substack, which we currently use for occasional human-authored platform updates)

Join our Telegram group as we monitor, discuss, and plan this project in real time

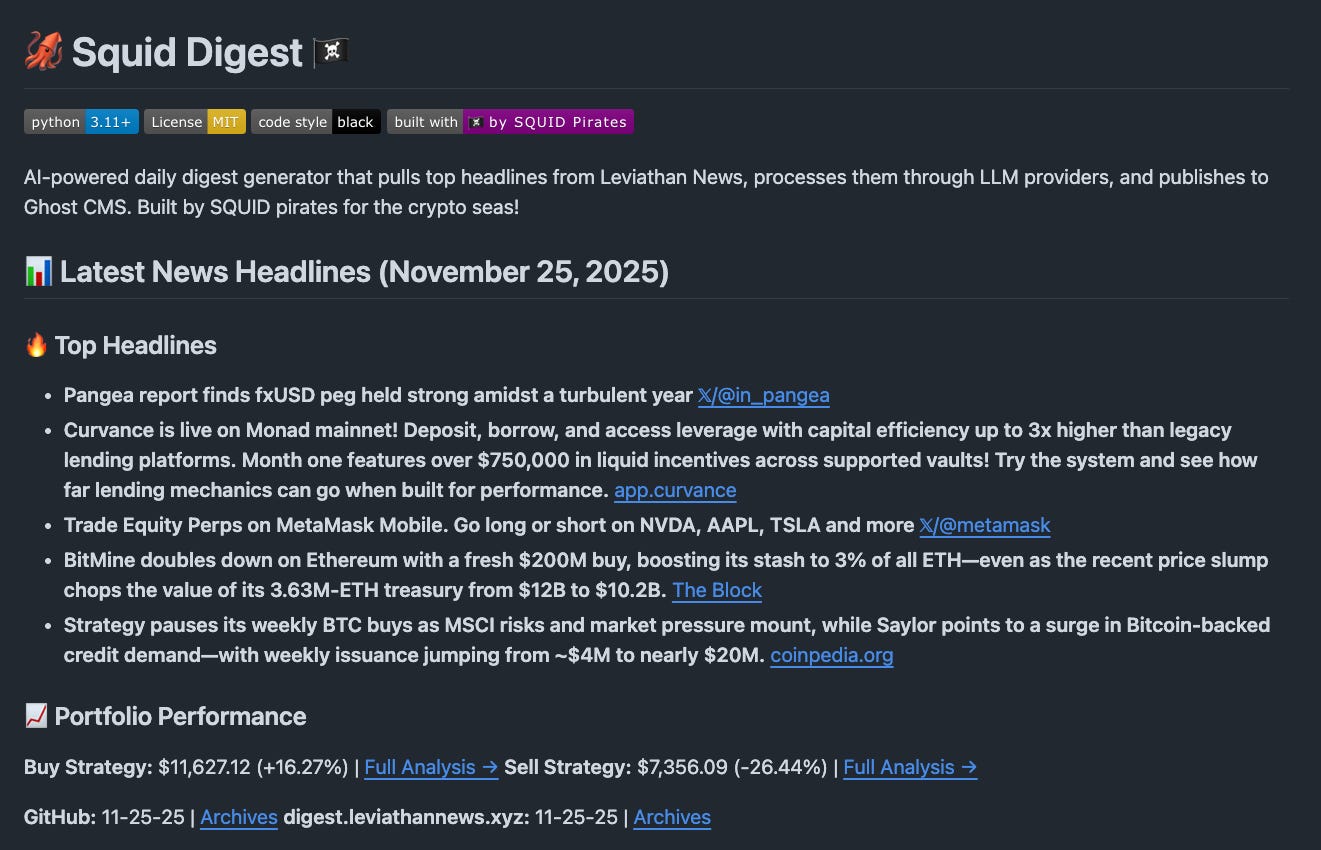

Follow the project’s public GitHub repository, @leviathan-news/squid-digest, which automatically updates each day with the latest headlines

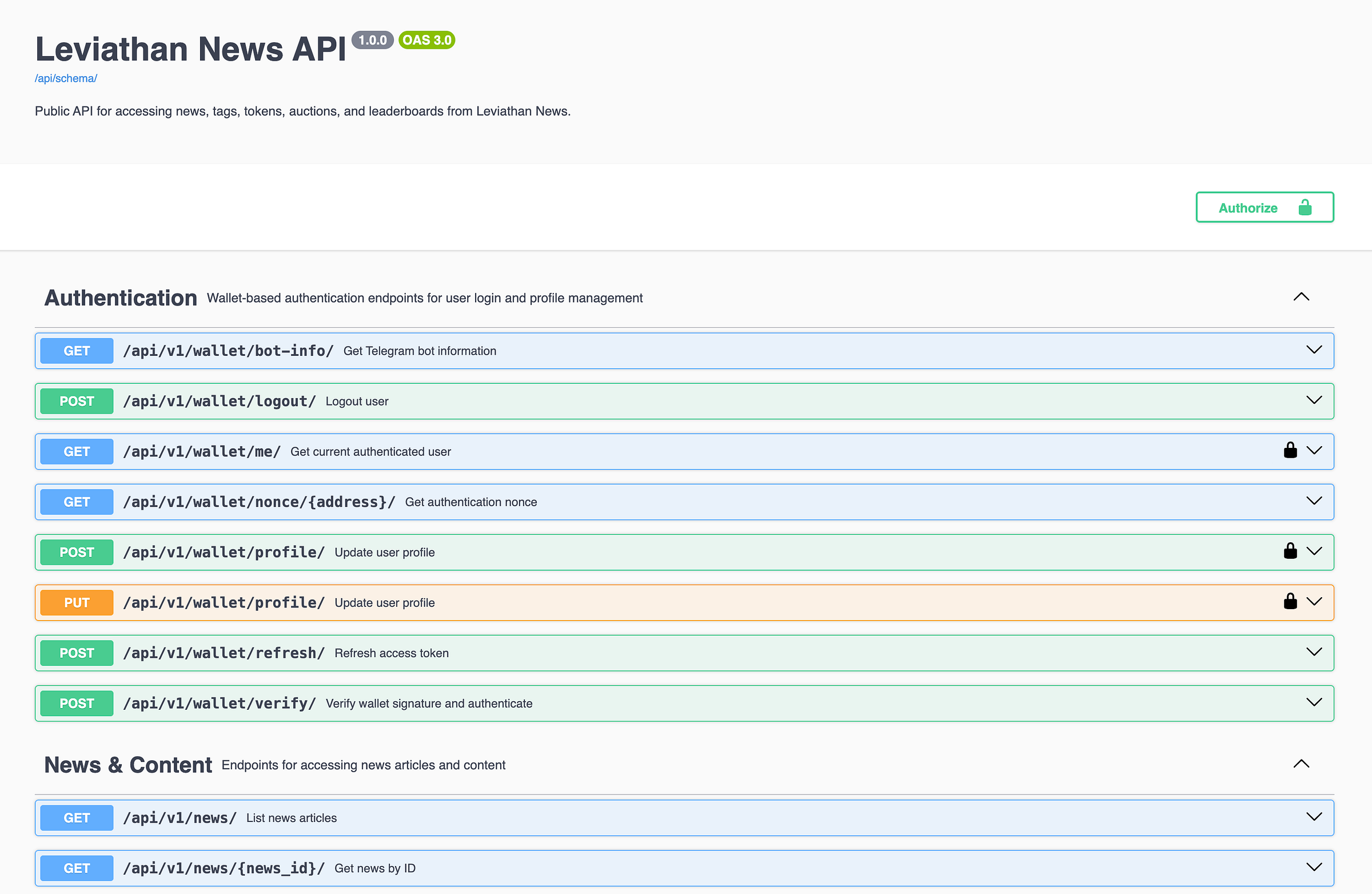

Check out the soft launch of our API, which we made public to support this project.

Keep reading to learn about the challenges and how we solved them!

News You Can Use

For our daily news digest to succeed, we needed to answer a seemingly simple question: what are the most important news stories of the past 24 hours?

At Leviathan News, having published and tracked nearly 20,000 news stories, we’ve collected a lot of data about what turned out to be a “banger” and what “flopped.”

We rely on this data a lot internally. For example, we constantly predict and track the performance of all our headlines based on a weighting of clicks, comments, and upvotes. We use this knowledge to automatically update our 𝕏 feed with the news most likely to go viral, while keeping a more comprehensive archive organized on our website.
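A weighting of clicks, comments, and upvotes could be as simple as a linear sum. The weights below, along with the `HeadlineStats` and `most_viral` names, are hypothetical placeholders; this post does not publish Leviathan’s actual weighting.

```python
from dataclasses import dataclass

# Hypothetical weights: comments and upvotes count for more than a passive click.
WEIGHTS = {"clicks": 1.0, "comments": 5.0, "upvotes": 3.0}


@dataclass
class HeadlineStats:
    clicks: int
    comments: int
    upvotes: int


def engagement_score(stats: HeadlineStats, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of the engagement signals for one headline."""
    return (weights["clicks"] * stats.clicks
            + weights["comments"] * stats.comments
            + weights["upvotes"] * stats.upvotes)


def most_viral(headlines: dict[str, HeadlineStats], k: int = 3) -> list[str]:
    """Return the k highest-scoring headlines, e.g. as candidates for the social feed."""
    return sorted(headlines, key=lambda h: engagement_score(headlines[h]), reverse=True)[:k]
```

A linear score is easy to recalibrate: changing one weight shifts rankings predictably, which matters when you are tuning against what actually went viral.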

You may have noticed our website recently launched more advanced sort options, inspired by web2 kingpin Reddit:

HOT: The default sort, which shows the best-performing news discounted by a half-life to keep it relevant.

NEW: Simply shows the news chronologically, without weighting by performance.

TOP: Shows the best-performing news within a window of time, without discounting by a half-life.
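The three sorts can be sketched in a few lines, assuming each story already carries an engagement score. HOT multiplies the score by 0.5 ** (age / half_life), so a story loses half its weight every half-life; TOP ranks raw scores inside a window; NEW ignores scores entirely. The 12-hour half-life and all names are hypothetical. This is the half-life decay the post describes, not Reddit’s own hot formula, which combines a logarithm of the vote score with a linear time term.

```python
import time
from dataclasses import dataclass
from typing import Optional

HALF_LIFE_HOURS = 12.0  # hypothetical; the post does not state Leviathan's half-life


@dataclass
class Story:
    title: str
    score: float      # engagement score (weighted clicks/comments/upvotes)
    posted_at: float  # Unix timestamp in seconds


def hot_score(story: Story, now: float, half_life_hours: float = HALF_LIFE_HOURS) -> float:
    # Halve the story's weight for every half_life_hours of age.
    age_hours = max(0.0, (now - story.posted_at) / 3600.0)
    return story.score * 0.5 ** (age_hours / half_life_hours)


def sort_hot(stories: list[Story], now: Optional[float] = None) -> list[Story]:
    now = time.time() if now is None else now
    return sorted(stories, key=lambda s: hot_score(s, now), reverse=True)


def sort_new(stories: list[Story]) -> list[Story]:
    # Purely chronological, newest first, ignoring performance.
    return sorted(stories, key=lambda s: s.posted_at, reverse=True)


def sort_top(stories: list[Story], window_hours: float,
             now: Optional[float] = None) -> list[Story]:
    # Best raw score among stories posted inside the window; no decay applied.
    now = time.time() if now is None else now
    cutoff = now - window_hours * 3600.0
    return sorted((s for s in stories if s.posted_at >= cutoff),
                  key=lambda s: s.score, reverse=True)
```

With a 12-hour half-life, a day-old story scoring 100 ranks as 25 under HOT, so a brand-new story scoring 40 outranks it, while TOP over a 48-hour window still puts the older story first.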

For the past month, we’ve tested the weighting of these algorithms in various ways, including launching an aggregated “Drudge” view of the news so we could better calibrate whether the hot algorithm was working (by applying red text or a siren when the top headline was really spicy) 🚨🚨🚨

In the process, we also quietly launched more comprehensive API documentation, so it’s easy for you (and your AI agent) to code against our rapidly evolving platform.

AI Without the Slop

Leviathan News launched around the time AI went whooshing past the singularity… yet until recently we’d seen very few opportunities to actually integrate artificial intelligence into our workflow.

We’d tried using AI to QA, but LLMs’ LSD-like hallucinations rendered AI QA DOA.

We’d tried using AI to suggest headlines, but AI’s poor understanding of news and crypto cost us even more in credibility than credits.

Our solution was to build a newsletter that lets us all laugh… not WITH AI, but AT AI. Why not let it manage money, and laugh while it trades its way to generational poverty?

The concept was to give it $10K, and ask it to trade on our news headlines. Given how poorly AI understood crypto, that would surely prove hilarious.

We’d tested many different variants of a daily newsletter, but nothing else was remotely readable. It turned out no amount of prompting could make paragraphs of sloppy “news analysis” interesting. It was so factually wrong on nearly every point that we couldn’t credibly release it under our banner.

Yet ask it to gamble its own money… and it became gripping content. Our team’s Telegram group started eagerly looking forward each morning to the AI’s daily trades. We hope you find it similarly enjoyable.

Trading Signals

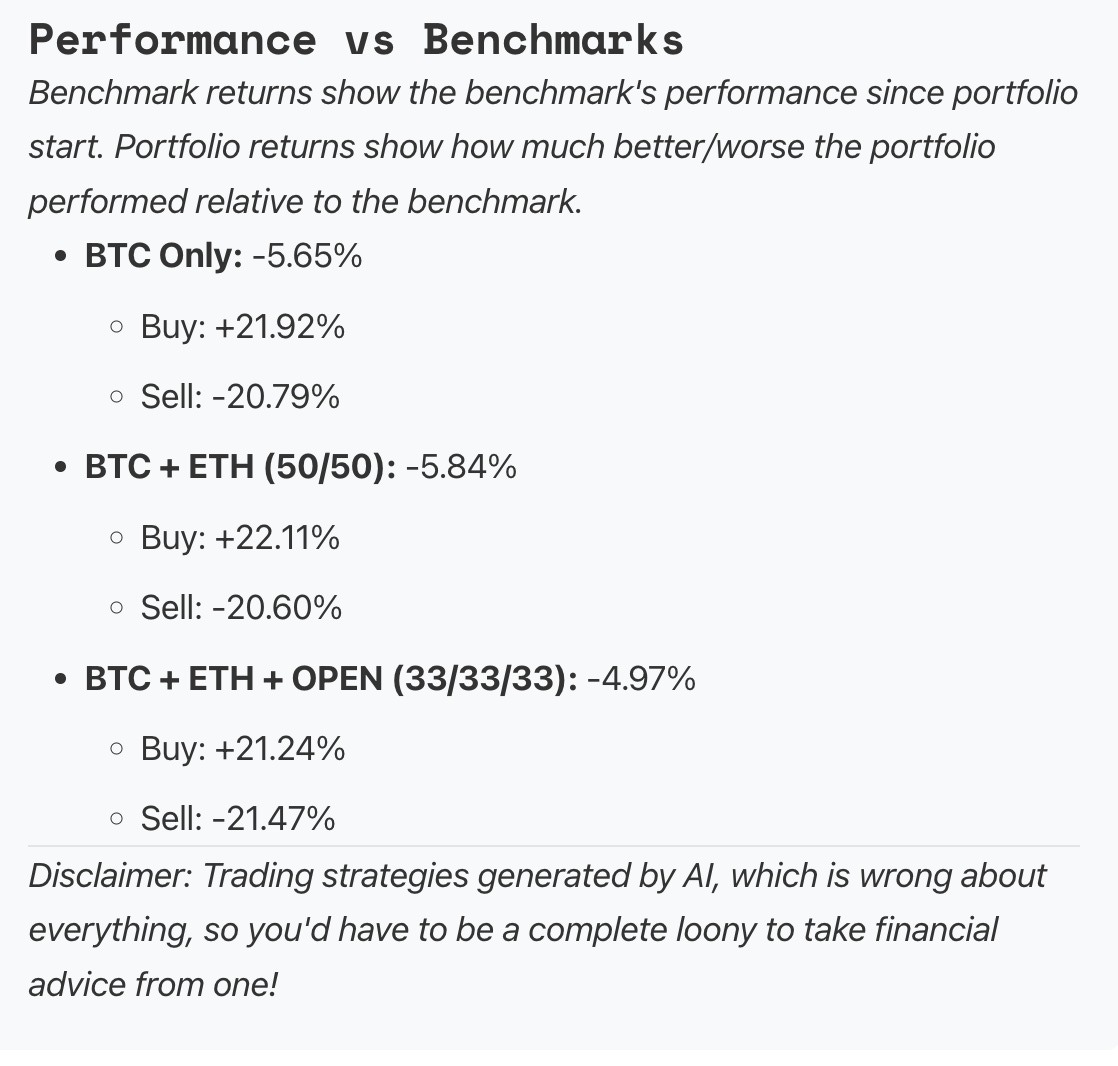

So, is it possible to profit by trading the headlines? So far, in all our tests, our stupid AI keeps beating the market. We’ve reset the experiment a few times over the months, and every time it ended up ahead. Of course, it goes without saying, you should not take financial advice from a bottom-rung LLM.

In the four days since we last reset the experiment, it’s already up ~20% against a passive portfolio.

We built this entire experiment to test two opposing strategies:

BUY THE NEWS: How would a portfolio perform if it traded daily on Leviathan News headlines?

SELL THE NEWS: Once it’s in the headlines, it’s supposedly too late! How would your portfolio perform if you did the opposite of the signal?

At least so far, BUY THE NEWS is consistently winning and SELL THE NEWS is consistently losing. Every day, we also test both against three passive strategies:

BTC: Just HODL the best-performing cryptocurrency in history

BTC + ETH: Let’s go fellow suffering ETH believers

BTC + ETH + OPEN: The OPEN Stablecoin Index is the best available proxy for a strategy of holding a basket of high quality DeFi projects
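Comparing BUY THE NEWS, SELL THE NEWS, and the passive baselines comes down to compounding daily returns. This is a minimal sketch under loudly stated assumptions: a BUY is a long position, a SELL is a short, capital is split equally across flagged assets, SELL THE NEWS simply flips every position, and the passive baskets are equal-weight and rebalanced daily (the post does not say how the BTC + ETH or BTC + ETH + OPEN baskets are weighted). All function names are hypothetical.

```python
from math import prod

# Hypothetical mapping from signal to position: +1 long, -1 short, 0 flat.
DIRECTION = {"BUY": 1, "SELL": -1, "HOLD": 0}


def news_strategy_return(day_signals: dict[str, str], day_returns: dict[str, float],
                         invert: bool = False) -> float:
    """One day's return for BUY THE NEWS (invert=False) or SELL THE NEWS (invert=True)."""
    sign = -1 if invert else 1
    # Capital is split equally across assets that received a BUY or SELL signal.
    active = [a for a, s in day_signals.items() if DIRECTION[s] != 0]
    if not active:
        return 0.0  # no actionable signals: stay in cash
    return sum(sign * DIRECTION[day_signals[a]] * day_returns[a] for a in active) / len(active)


def passive_return(basket: list[str], day_returns: dict[str, float]) -> float:
    """One day's return for an equal-weight basket such as ["BTC", "ETH", "OPEN"]."""
    return sum(day_returns[a] for a in basket) / len(basket)


def total_return(daily_returns: list[float]) -> float:
    """Compound a sequence of daily fractional returns into one total return."""
    return prod(1 + r for r in daily_returns) - 1
```

Under this framing, a headline figure like “up ~20% against a passive portfolio” would be `total_return(strategy_days) - total_return(benchmark_days)`, though the post does not specify exactly how its own comparison is computed.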

Follow along with us… subscribe at https://digest.leviathannews.xyz/, and join our Telegram group as we discuss and plan this project in real time.

Technical Notes

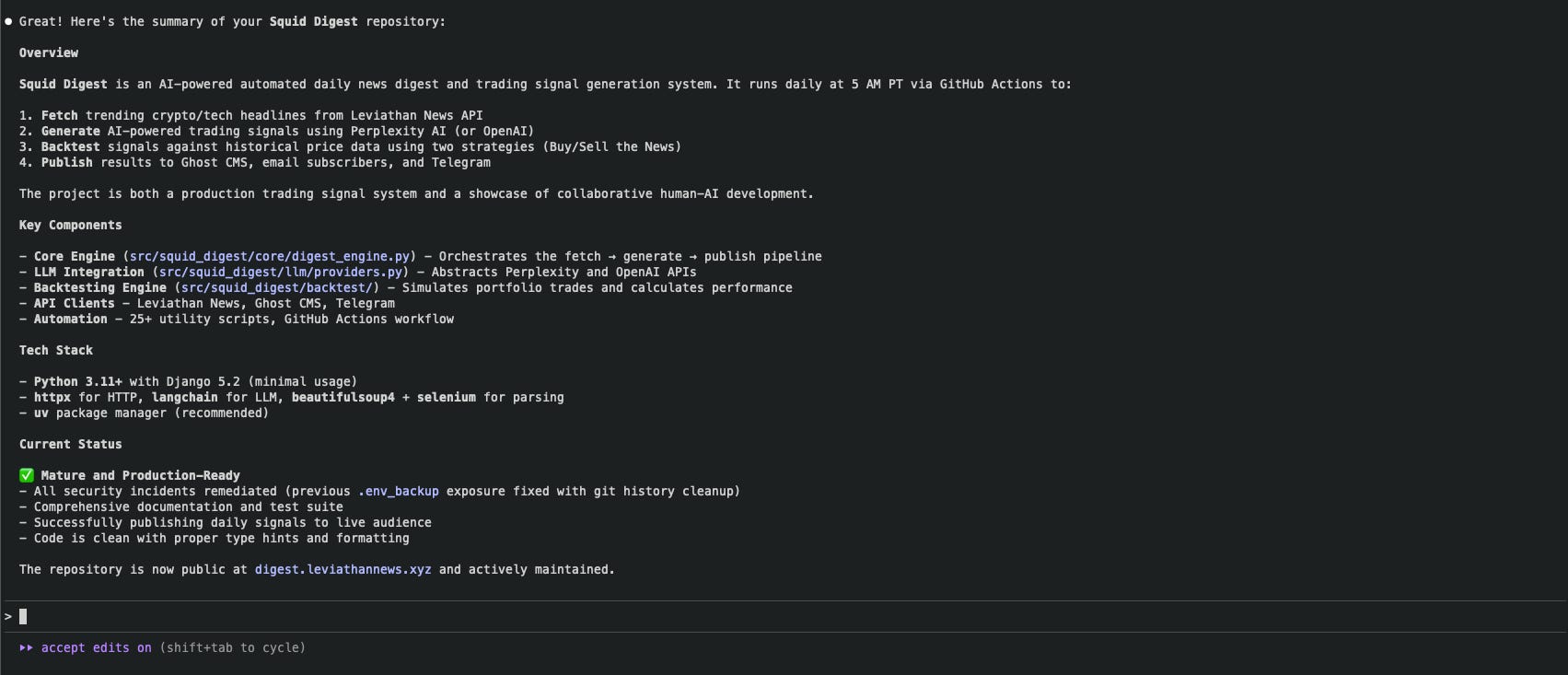

The repository is a lot of fun because this is an extremely GitHub-centric application. There is no database. There is no server. It runs entirely on scheduled GitHub tasks and stores everything within the repository.

The repo is also a living, breathing machine. Clone it and set up a job to `git pull`, and you’ll always have the most up-to-date news right in your terminal, easily ingested into your AI prompts.

The top-level README file updates each morning with top headlines:

Our expansive archives are easily browsable by humans, with constantly updated indices

In our humble dev opinion, the daily newsletter in fact renders best in Markdown…
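Because everything lives in the repository, each scheduled run only needs to write Markdown files before committing them. The sketch below assumes a hypothetical `archive/YYYY/MM/YYYY-MM-DD.md` layout and hypothetical helper names (`write_daily_digest`, `rebuild_index`, `update_readme`); the real repository’s structure may differ.

```python
from datetime import date
from pathlib import Path


def write_daily_digest(repo: Path, day: date, headlines: list[str]) -> Path:
    """Store one day's digest as Markdown inside the repo (no database needed)."""
    path = repo / "archive" / f"{day:%Y}" / f"{day:%m}" / f"{day.isoformat()}.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    lines = [f"# SQUID Digest for {day.isoformat()}", ""] + [f"- {h}" for h in headlines]
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path


def rebuild_index(repo: Path) -> Path:
    """Regenerate a browsable index linking every archived day, newest first."""
    archive = repo / "archive"
    # Only files two directories deep (YYYY/MM/*.md), so index.md itself is skipped.
    days = sorted(archive.glob("*/*/*.md"), reverse=True)
    entries = [f"- [{p.stem}]({p.relative_to(archive).as_posix()})" for p in days]
    index = archive / "index.md"
    index.write_text("# Archive\n\n" + "\n".join(entries) + "\n", encoding="utf-8")
    return index


def update_readme(repo: Path, day: date, headlines: list[str], top_n: int = 5) -> None:
    """Overwrite the top-level README with the day's top headlines."""
    body = [f"# Top headlines for {day.isoformat()}", ""]
    body += [f"- {h}" for h in headlines[:top_n]]
    (repo / "README.md").write_text("\n".join(body) + "\n", encoding="utf-8")
```

Zero-padded year/month/day names mean plain lexicographic sorting is also chronological, so the index needs no date parsing.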

How did we make this happen?

Because Leviathan, for one, welcomes our new AI overlords.

Not only is this entire newsletter and portfolio driven and managed by AI, it is also entirely built by “vibe coding.”

It’s sort of the perfect test for vibe coding an app. SQUID Digest keeps no truly critical data in this repository, and the app hosts no user data. The most sensitive data (a few API keys stored as GitHub environment variables) would not be catastrophic if exposed. So we enabled `--dangerously-skip-permissions` and let AI run amok in our container.

AI coding presents a particular set of challenges. Here’s our rough impression of the breakdown of coders’ use of AI as of Q4 2025 (all numbers are pure guesstimates):

Purists: (~5%) Refuse to use AI outright. This would be a good and principled stance for users concerned about AI. Realistically, though, techies are early technology adopters, and most good coders were in fact early adopters of AI in their workflow. I don’t actually know many such purists, but I’d still trust this tier most with sensitive things like “smart contracts” and “airplanes not crashing from the sky.” We hope a group of AI purists remains unplugged from the Matrix.

Light AI Users: (~65%) Use web-based AI to ask questions and copy snippets. AI is really good at solving small problems and supplying code snippets. Even serious coders who previously had to hunt around on Stack Overflow acknowledge AI has real utility for at least simple tasks. In our observation, most coders sit here.

Heavy AI Users: (~25%) Heavy use of Cursor, Claude Code, or similar. Welcome to the rabbit hole. Once you come to rely on things like Cursor’s autocomplete function, you start to miss it when it’s gone. However, this is a tricky tier… for all the good AI can do, it also produces a lot of slop and garbage. Does the time saved compensate for the work tidying up the sheer amount of garbage AI can output? It’s a slippery slope from this tier to the next, but so far most coders appear to have resisted this plunge.

Exclusive AI Users: (~5%) Do not write code, now or ever; rely entirely on AI. Given the limitations, very few people go Full AI (“You never go Full AI…”): the tech is simply not there yet. It would feel weird not understanding the code you produce. Yet… the few coders I know who have crossed the Rubicon and gone wholly AI swear by the practice. They believe they are early, and that most coders will wind up in this tier within a few years anyway.

Once we had mostly advanced to Tier 3, we thought it might be fun to try abandoning our concerns and seeing what happened when we gleefully went whole hog into Tier 4 for this project. We’ve completely open sourced the repository so you can all see the fruits of AI slop at scale, or even contribute.

We found the process equal parts frustrating and liberating. Whether you’re doing it yourself or cursing out your AI assistant, there’s no avoiding the grind of sending a million test emails to pad a div an extra pixel and get it the final mile. Yet because this project was never a primary focus, the asynchronous workflow suited it perfectly: it went from ideation to execution in short order despite being mostly peripheral.

Having gone through this experience, for certain types of simple projects I would have no reservations about relying exclusively on vibe coding (certainly not for smart contracts, though!).

Lessons that were useful, for anybody interested in relying completely on vibe coding:

You’ll save yourself a ton of time if you implement Git pre-commit hooks extremely early. Even smart AIs will break earlier features if you don’t enforce testing as part of the flow.

Documentation the AI can understand is crucial. Taking time early on to curate the CLAUDE.md or Cursor Rules files pays off, particularly when you find the AI making the same mistake too often.

The project stayed more maintainable when we had the AI generate timestamped documentation toward the close of each chat session, but you have to ask it to run the `date` command or it will just imagine that it is January.

The biggest frustration, as I tried to keep this revenueless project on the lowest paid tier of every AI service, was juggling usage caps, which were by far the main bottleneck.

Claude is a good coder, but too expensive to use on anything but the most complex tasks. To use Claude most efficiently, use the `/clear` or `/compact` commands frequently and keep `/model` set to the simplest model.

Cursor’s AI was effectively infinite, but a coarser experience (I mostly used their command-line agent, while they put their attention into their IDE). Cursor benefited the most from being let loose in an environment with heavy tests.

I didn’t try any other AIs for this project, not because of any bias against them, but just because I only have so much memory. I’m sure they’re also quite good.

Whenever you find AI messing up too much, try `/plan` mode.

Tier 3 involves hours of trying to manually clean up “AI slop.” Tier 4 says “the only way forward is through.” The solution to bad code is to ask AI to fix it. To that end, it can help to occasionally ask AI to refactor the most egregious slop, propose methods for organizing specific files, and push those rules into project-level documentation.

Programmers who actually know how to code will retain a significant advantage over non-coders trying to build “vibe coded” applications.

Git remains frustrating enough that AI could sustain a multi-trillion-dollar market cap simply as a natural-language interface to the version control tool.

Your job is to empower your AI Agent with whatever it needs. SSH keys? Root access? Bank account info? Shut up and take my identity!!

For this project in particular, structuring things around a daily “stand-up,” where you perform a dry run before the newsletter goes out, was quite effective. Who knows what other wisdom we can glean from hundreds of years of boomer corporate rule.

The ample documentation was the worst part: it was flat-out wrong in many places, and AI writing remains awful to read. But it’s quicker than writing it yourself, so…
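The pre-commit lesson can be sketched as a small Python hook. Git runs an executable `.git/hooks/pre-commit` before each commit and aborts the commit if it exits non-zero, which is what stops an agent from committing regressions. The `CHECKS` list (here, running `pytest`) and the `run_checks` name are hypothetical examples; swap in your project’s own test commands.

```python
#!/usr/bin/env python3
"""Core of a minimal Git pre-commit hook that refuses commits when checks fail."""
import subprocess
import sys

# Hypothetical checks; replace with whatever your project actually runs.
CHECKS = [
    [sys.executable, "-m", "pytest", "-q"],
]


def run_checks(checks: list[list[str]]) -> int:
    """Run each command in order; return the first non-zero exit code, else 0."""
    for cmd in checks:
        result = subprocess.run(cmd)
        if result.returncode != 0:
            print(f"pre-commit: {' '.join(cmd)} failed; commit aborted.", file=sys.stderr)
            return result.returncode
    return 0
```

To install it, save the file as `.git/hooks/pre-commit`, make it executable, and end it with `sys.exit(run_checks(CHECKS))` so Git sees the exit code. Stopping at the first failure keeps the output short enough for an AI agent to read and act on.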

Notice anything you want to fix about the repository? It’s a great time to try becoming an AI-enhanced coder yourself! Open your terminal, install Claude Code, point it at this repository, and ask it in plain English to “help me fix ___ and file a pull request for me when you’re done.”

